Doing Research in Practice

This chapter is based on the article Doing research in practice: some lessons learned, XRDS 20, 4 (June 2014), 15-17.

System/360 Model 91 Panel at the Goddard Space Flight Center (the image was taken by NASA sometime in the late 60s). Fred Brooks managed the development of IBM’s System/360 family of computers and the OS/360 software support package. He later wrote about the lessons learned in his seminal book The Mythical Man-Month.

In the previous post I argued that, thanks to extensive tacit and intuitive knowledge and skills, practitioners may be able to acquire unique understandings. In this chapter I want to argue that the knowledge you acquire through practice is of limited value if your learning process is not disciplined. Our experiences differ a lot, and so do our abilities to communicate and understand them. Practice-based research is very limited if it is not accompanied by the following features: a prepared mind, systematic documentation, generalization, evaluation, and iterations [1].

Prepared Mind. Louis Pasteur famously noted, “chance only favors the mind which is prepared.” Pasteur was speaking of Danish physicist Oersted and the almost “accidental” way in which he discovered the basic principles of electromagnetism. He elaborated that it is not during accidental moments that an actual discovery occurs: the scientist must be able, with a prepared mind, to interpret the accidental observation and situate the new phenomenon within the existing work. Similarly, our learning in practice is limited without awareness of the context and existing solutions. We may build the wrong solution, or waste our time by “reinventing the wheel,” instead of exploiting existing work, available theories, and empirical results. Brooks similarly argued that practitioners should know exemplars of their craft, their strengths and weaknesses, concluding that originality is no excuse for ignorance [2]. Furthermore, if we are not able to connect our observations to a broader context and existing work, we may not be able to judge their relevance and importance, and we may miss the opportunity to make an important discovery.

Systematic Documentation. To support research, your practical experiences should be documented. We keep forgetting things, and our memories change over time. Keeping systematic documentation also enables retrospective analyses and the discovery of new findings even after our projects finish. It is important to document all important decisions, describing the limitations and failings of the design, as well as the successes, both in implementation and usage. Of particular value is documenting the rationale for our decisions, describing why we did something, not only what we did. This is not easy, as it often requires making explicit the elements practitioners use intuitively. But such documentation is extremely valuable, especially for helping others understand why some things worked well and others did not.

Sharing and Generalization. Knowledge obtained from practice can be viewed as a generalization of experiences. In a normal design effort the primary goal is to create a successful product, and lessons learned are restricted to the particular design and the people involved in it. To be useful to others, some effort has to be invested in generalizing these lessons.

Generalization enables correlating different experiences, which otherwise may look too specific. In the process of generalization, practitioners need to expand their focus beyond the current design situation, viewing the design problem, solutions, and processes as instances of more general classes. For each of the collections of lessons learned discussed in the previous section, we may identify a corresponding type of generalization (see the chapter Design-Based Research): domain theories as generalizations of problem analysis, design frameworks or patterns as generalizations of design solutions, and design methodologies as generalizations of design procedures.

However, it is also important not to “over-generalize.” Practice usually cannot provide us with the insights needed to develop “grand” and universal theories. Rather, the emphasis should fall somewhere between narrow truths specific to one situation and broader knowledge covering several similar situations.

Evaluation. Evaluation is a necessary part of any learning process. This is especially true for domains such as software engineering or human-computer interaction, where we have to deal with complex human and social issues for which we do not have strong theories, models, and laws. By evaluation I do not necessarily mean formal evaluation activities conducted in “laboratories” (even though these may be used sometimes). I see evaluation as a systematic effort to get feedback on our findings and the quality of our work that is not based on our intuition. Even simple techniques, such as peer reviews, may be remarkably effective in identifying shortfalls in our problem analysis, the solution, and the design procedure. To enable such a process, practitioners need to be prepared to make their reasoning explicit, public, and open to critical reflection and discussion. The key is to make your intuitive decisions more explicit and “vulnerable” to the critique of others and to empirical findings.

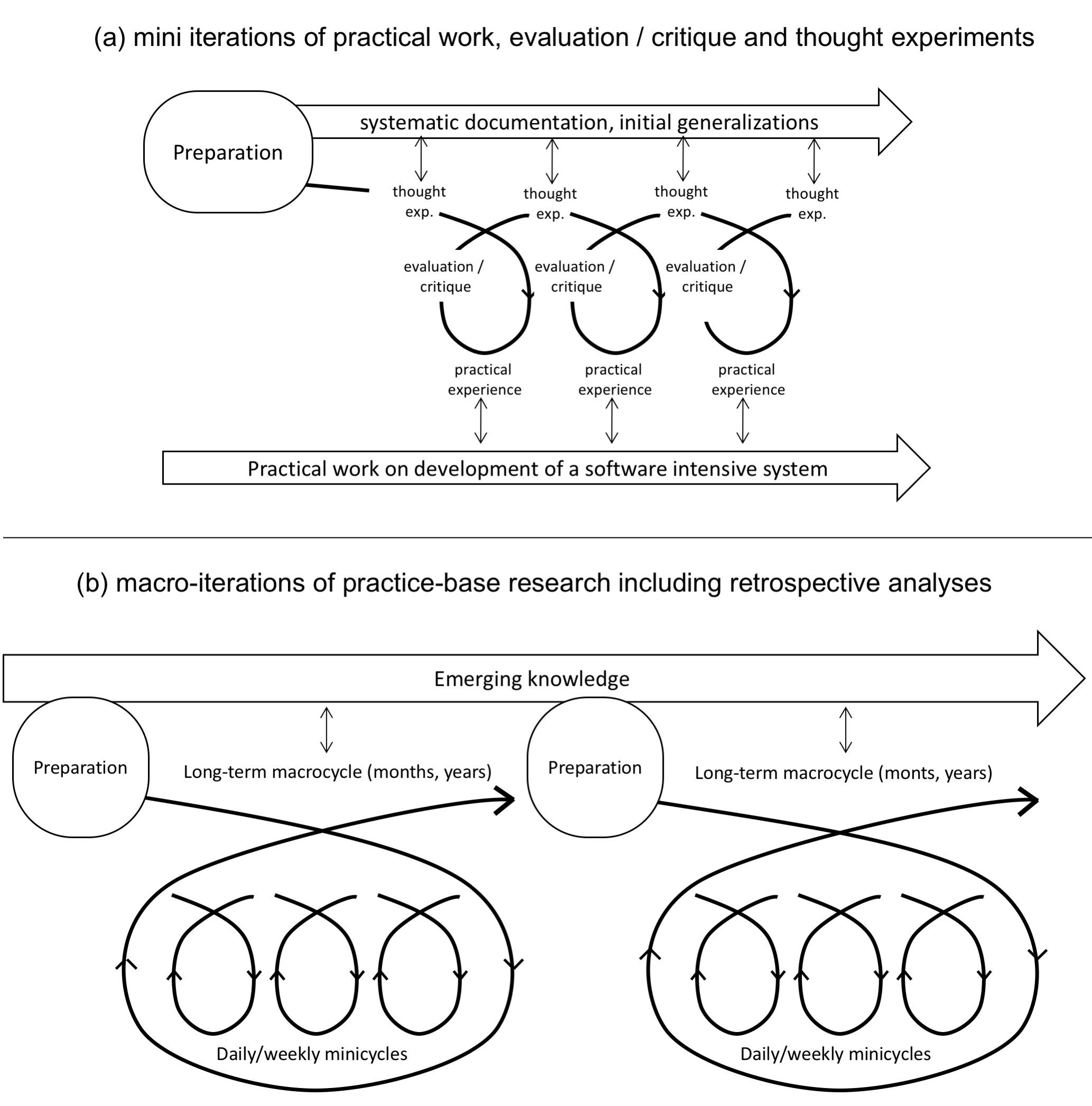

Iterations. Lastly, to maximize learning, all of the previous elements should be applied over a number of iterations. While a single event can have an impact, it usually takes many events to extract general features and generate rules from experience. In everyday work, you should try to combine elements of preparation, actual practical work, and evaluation (see Figure 1a).

At the beginning of each learning cycle you should spend some time preparing for your actions, reflecting on previous actions, and hypothesizing about how you expect your new actions to affect outcomes. I call this phase a “thought experiment.” In this phase, it is important to make your expectations and assumptions explicit, so others can understand and evaluate them. After the actual practical work, there should be an evaluation in which the outcomes of your actions are reviewed in the light of your original assumptions. The results of evaluations can further improve your understanding and serve as a basis for new cycles. Ideally, this process should also include preparatory research, where practitioners collect as much information about existing work as possible at the beginning, and make their goals explicit and clear. This model may be too idealistic for practical work. However, I find it a very useful guide for structuring everyday work and adding some discipline to it, and in that way maximizing learning from practice and obtaining knowledge that may be interesting for the research community.

Learning should not stop at the end of a project. New insights and broader generalizations will often occur through retrospective analyses of the lessons learned and the data collected throughout a whole project. Brooks, for example, spent several years analyzing and reflecting on the lessons learned in the design of OS/360, producing the influential book The Mythical Man-Month [3]. These macro-iterations of retrospective analyses can also happen on a broader scale, covering several projects from different contributors. For example, in special issues of journals, editors often spend some time summarizing and generalizing findings from individual articles.

References

[1] Edelson, D.C. Design research: What we learn when we engage in design. Journal of the Learning Sciences 11, 1 (2002), 105–121.

[2] Brooks, F.P. The Design of Design: Essays from a Computer Scientist. Addison-Wesley Professional, 2010.

[3] Brooks, F.P. The Mythical Man-Month. Addison-Wesley Professional, 1995.