The Four Points of the Research Compass

This essay is based on the article The four points of the HCI research compass, interactions 20, 3 (May 2013), 34-37.

The Flight Director Attitude Indicator (FDAI) of the Apollo Guidance Computer (AGC). Wikimedia Commons.

Introduction

Most discussions about research in computer science (CS) focus on research methods and skills, on the question of how research should be conducted (e.g., [1]). Research tools and methods, however, are only passive instruments in the hands of motivated researchers. But what exactly motivates and drives CS researchers? Here I address this question, arguing that we need to be more thoughtful about our research motivation, not just our research skills.

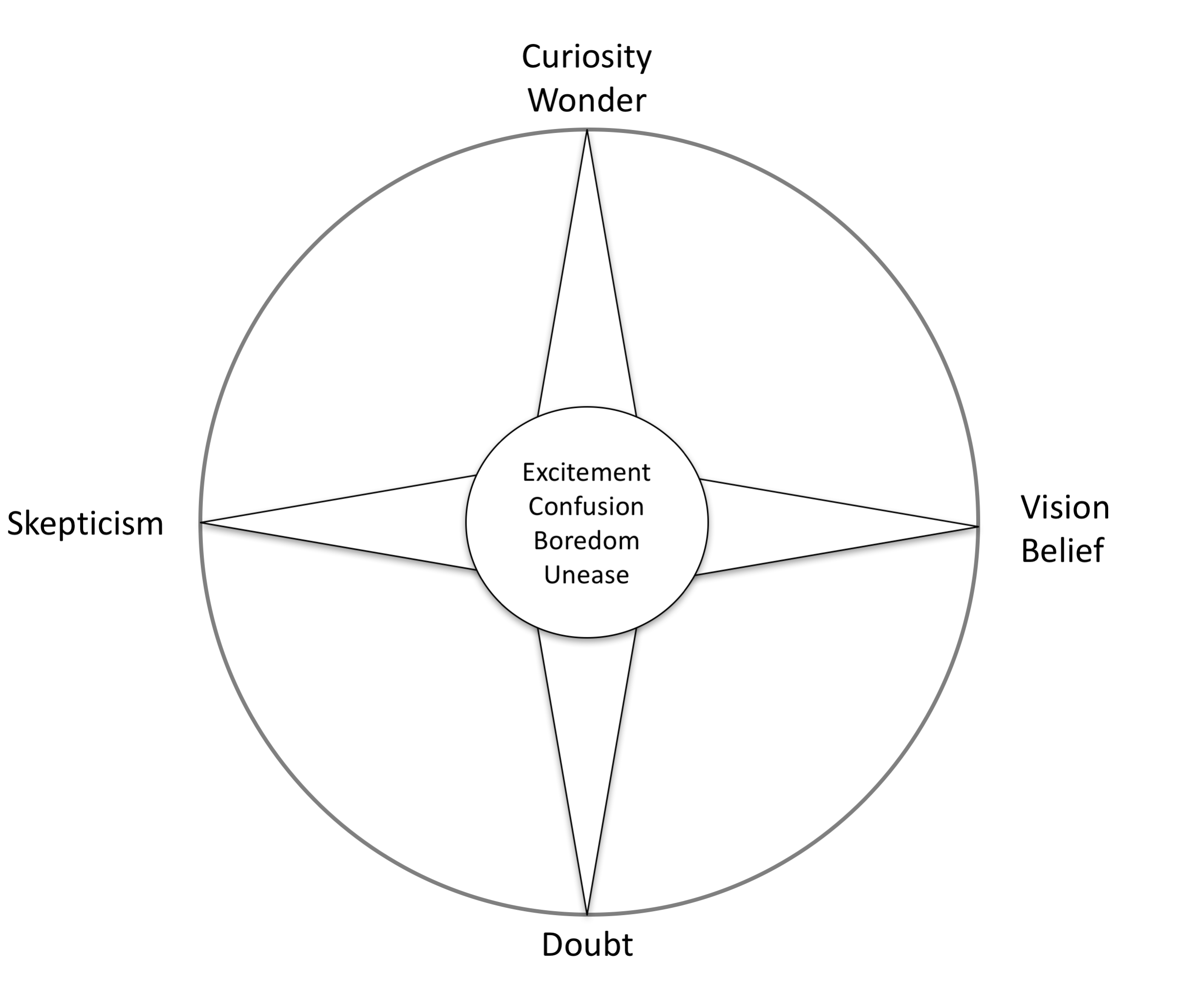

Research skills can help us to do the research properly, but research motivation is the main force behind all of our research efforts. To support this discussion, and inspired by recent ideas from philosophy [2], I use the metaphor of a compass to discuss research motivation on the meta level, independent of the research methods being used. Using this metaphor I present a new, higher-level view on CS research as being driven by four main motivators (Figure 1):

- Curiosity and wonder, where we follow our strong interests and desires to learn new things;

- Doubt, where we want to obtain deeper and more certain understanding;

- Belief and vision, where we set or follow research ideals; and

- Skepticism, where we question the possibility of reaching some research goals.

I argue here that these four directions are legitimate motivators for doing research, and that we need to support efforts in all of them. Each of these motivators has positive and negative sides, and awareness of the pros and cons can help us to do better research. I also contend that the main question we as a community need to answer is not which of these directions to follow, but rather what the right balance is among contributions motivated by all four sides. I illustrate each of these motivators with concrete examples from the CS field.

Curiosity and Wonder

As the simplest and the most obvious point of the research compass, curiosity and wonder describe the natural characteristic of researchers to have a strong interest in and eagerness to know more about a topic. Many CS research contributions came from researchers being fascinated by or curious about some issue, including technology, people, or interaction between technology and people. Curiosity and wonder are also closely connected to academic freedom. Brad Myers emphasized the pivotal role of creative and curious university researchers in the advancement of the human-computer interaction (HCI) field [3].

While curiosity and wonder are the driving force behind innovations, they alone are not sufficient for a research contribution. If overemphasized, they may even produce negative effects. For instance, they may prevent us from obtaining more depth in our research. As noted by Saul Greenberg and Bill Buxton, the CHI conference sometimes favors innovative and more “curious” solutions at the price of more “doubting” ones [4]. They argued that contributions that reexamine existing results are seen as “replications,” non-original contributions that are not valued highly, and when they are reviewed the typical referee response is “it has been done before; therefore, there is little value added.” In addition, as we are working in a discipline that studies people, we may need to restrain our curiosity due to a number of sensitive ethical issues.

Doubt

When we make some discovery we may ask ourselves if our findings are wrong, coincidental, or a result of wishful thinking. Such questions are the beginning of doubt, one of the most important motivators behind research. Any evaluation can be viewed as an effort to reduce doubts about our findings. Experimental evaluation, for example, casts doubt on our claims in the form of a null hypothesis, a claim that an observed phenomenon has nothing to do with our intervention. We need to invest effort to reject the null hypothesis, thereby reducing the doubt that our findings are accidental. Ethnographic methods and techniques, such as interview protocols and recordings, and analytic frameworks, such as grounded theory, add rigor and discipline to the study of complex social phenomena. They force us to systematically document and analyze observed phenomena, adding certainty to our observations and conclusions. Similarly, by publishing our articles in peer-reviewed venues, we subject our findings to the doubt of expert peers.

As a research motivator, doubt is primarily a positive force. Contrary to skepticism, doubt does not question the possibility of knowing something or the validity of pursuing some direction. When we doubt some finding, we want to set it on firmer ground and add more certainty to it. Shumin Zhai nicely argued for this in his discussion of the importance of evaluation in HCI:

“It is the lack of strong theories, models and laws that force us to do evaluative experiments that check our intuition and imagination. With well-established physical laws and models, modern engineering practice does not need comparative testing for every design. The confidence comes from calculations based on theory and experience. But lacking the ability to do calculations of this sort, we must resort to evaluation and testing, if we do not want to turn HCI into a ‘faith-based’ enterprise” [5].

Too much doubt also has its disadvantages; for example, it can lead to situations in which we work only on minor improvements that can be easily tested, but do not produce enough innovation. This topic has been a subject of discussion in the CHI community for years (e.g., [4,5]).

Vision and Belief

While the term belief may have a negative connotation in the scientific world because of its vague definition and association with religion, it is difficult to imagine any research activity without some form of belief or guiding vision. We normally believe in the importance of doing research in our domain (and hope that funding agencies share our belief) even without having strong evidence about the value of doing such research (yet). The value of “fundamental research,” for example, may become evident only after a long period, if ever. In the field of HCI, Buxton talked about the long cycle of innovation, noting that it may take several decades for a research innovation to become valuable in practice:

“The move from inception to ubiquity can take 30 years. … The first prototype of a computer mouse appeared as a wooden box with two wheels on it in the early 1960s, about 30 years before it achieved the level of ‘ubiquity’” [6].

Looking at the larger scale, behind many subfields of computer science and HCI we may find a few visionary contributions that have driven and inspired other researchers. Many core ideas in HCI are inspired by Vannevar Bush’s “memex” paper [7], J.C.R. Licklider’s vision of networked IT in the 1960s, and Douglas Engelbart’s NLS (online system) demonstration at the Fall Joint Computer Conference in San Francisco in December 1968 [8]. Douglas Engelbart received the ACM Turing Award in 1997 for “an inspiring vision of the future of interactive computing and the invention of key technologies to help realize this vision.” Don Norman’s book The Psychology/Design of Everyday Things practically defined a new domain, user-centered design, inspiring thousands of HCI contributions (Google Scholar citation count close to 10,000) [9]. Similarly, Mark Weiser’s article “The Computer for the 21st Century” has been an inspiration for thousands of contributions (Google Scholar citation count above 9,000) [10]. Visionary contributions can also be a result of a community effort. Communications of the ACM, for instance, published a number of special issues introducing a shared vision for many of the HCI subfields, including perceptual UIs (2000), attentive UIs (2003), and organic UIs (2008). Similar roles may be played by workshops or events such as Dagstuhl Seminars. Such initiatives serve an important role in outlining or consolidating a new field, defining its basic terminology, and setting a high-level research agenda.

Vision is a very important component of any community effort, as shared vision can inspire researchers and enable synergistic development of the field. Such vision can also come from the outside, for example, from funding agencies. The European Commission (EC), within its Framework Programs, defines themes and “challenges,” such as “pervasive and trusted network and service infrastructures” and “learning and access to cultural resources,” which are used to guide and prioritize the funding of research projects. Similarly, the U.S. National Science Foundation (NSF) has a number of core programs aimed at stimulating and guiding research in particular directions, such as “human-centered computing” and “robust intelligence.”

On an individual level, having a personal vision can help us to define our research line and identity. A common component of the academic job application, for example, is the research statement, in which applicants are expected to express the future direction and potential of their work and propose a valuable, ambitious, but realistic research agenda. For personal development, it is important to continuously work on the personal vision, and to make it explicit and open to critical reflection and discussion with mentors and colleagues.

Too much reliance on vision, on the other hand, may have some negative consequences. While vision may inspire and guide research, it makes sense only if it is followed by a number of curious and doubting contributions. If we get too excited about the vision we are following, we may become less critical about our findings. This can lead to confirmation bias, a tendency to favor information that confirms our beliefs or hypotheses. Vision can also guide us in the wrong direction. We may also end up with visions that are too narrow, which may lead to overspecialization and to situations in which we are blind to innovative solutions because they are beyond the scope of any of the currently active visions. In addition, to be useful, vision should be based on deep knowledge and understanding of the research field, not on ignorance of it.

Vision and belief are much more complex research motivators than curiosity and wonder. When we are driven by curiosity, we simply follow interests and the desire to learn something new. Vision and belief, on the other hand, require longer-term commitment to some idea, as well as constant effort to focus and organize research activities.

Skepticism

Skepticism is a loaded term with a number of definitions. Closest to the meaning I use here is the definition of skepticism as “doubt regarding claims that are taken for granted elsewhere” [11]. I view research skepticism in a similar fashion, as a reality checker that questions the fundamental premises we normally take as a given. As such, skepticism can call attention to the viability, feasibility, or practicality of a research direction or approach. Contrary to doubt, which can motivate us to further investigate some topic to obtain more certainty, skepticism may call us to abandon some line of inquiry and consider alternatives.

Fred Brooks’s paper “No Silver Bullet—Essence and Accidents of Software Engineering” is probably one of the best examples of useful skeptical thought in computer science [12]. Brooks expressed his skepticism toward approaches to software engineering research that aim to discover a single solution that can improve software productivity by an order of magnitude. Brooks seriously questioned the possibility of ever finding such “startling breakthroughs,” arguing that such solutions may be inconsistent with the nature of software. Brooks also made clear that his skepticism is not pessimism. While Brooks questioned the possibility of finding a single startling breakthrough that will improve software productivity by an order of magnitude, he believed that such improvement can be achieved through disciplined, consistent effort to develop, propagate, and exploit a number of smaller, more modest innovations. In “Human-Centered Design Considered Harmful,” Norman was skeptical about naive approaches to human-centered design (HCD), stating that HCD has become such a dominant theme in design that interface and application designers now accept it automatically, without thought, let alone criticism [13]. The Greenberg and Buxton paper “Usability Evaluation Considered Harmful (Some of the Time)” provides a similar skeptical view on the HCI practice, encouraged by educational institutes, academic review processes, and institutions with usability groups, which promote usability evaluation as a critical part of every design process [4]. Based on their rich experiences, they argued that if done naively, by rule rather than by thought, usability evaluation can be ineffective and even harmful.

Skepticism can be a useful antidote to too much excitement or opportunism in doing research. Skeptical contributions, if well argued, can prevent the wasting of energy and resources in pursuing wrong directions and stimulate us to rethink our approach. The same applies on the individual level. Having curious and enthusiastic students guided by experienced and more skeptical mentors is a proven and very successful model for educating researchers.

Too much skepticism, on the other hand, comes with negative side effects. Chris Welty nicely described this problem as what he called “unimpressed scientist syndrome.” In his keynote speech at the 2007 International Semantic Web Conference, Welty portrayed his personal history of strong skepticism toward many computing innovations that later became very successful, including email, the World Wide Web, and the Semantic Web [14]. He argued that this may be a wider problem, and that many academic researchers are skeptical by rule rather than by thought, rejecting innovative solutions without serious consideration with phrases such as “I’ve seen this before; this is not gonna work.” Furthermore, if skepticism is not a well-argued result of long experience, it may trigger an emotional debate without contributing much to it. Similar to vision, useful skepticism requires deep knowledge and a fundamental understanding of the research field. It is not surprising that many skeptical authors are also authors of influential visionary contributions.

Skepticism is probably one of the most complex influences on research. Contrary to doubt, which can rely on a number of tools and methods (e.g., experiments, ethnographic methods), there are no simple and structured tools for skepticism. Useful skepticism requires careful thought, experience, and an excellent overview of the field.

Conclusion

The four points of our research compass metaphor do not suggest that research contributions should be motivated by only one direction. Even individual contributions usually combine several elements, presenting our discoveries (curiosity), for instance, with their evaluations (doubt). In a team it is good to have individuals with different affinities. At a community level, it is equally important to have contributions motivated by all four points. The community cannot develop without new ideas and new visions, but without a healthy dose of doubt and skepticism, we can get incorrect results or go in a faulty direction. It is also the responsibility of the community to set high standards and maintain the right balance among contributions originating from different research motivations.

I hope the research-compass metaphor can help researchers to be more thoughtful about their professional development and stimulate them to ask themselves questions such as:

- Are we curious enough about the topics of our research? Do we explore enough or do we jump too quickly to tests? Do we have a plan to maintain our curiosity, such as a sabbatical leave?

- Do we have vision about where we would like to go, or are we simply following the latest trends?

- Do we doubt our findings enough and are we using the right methods?

- Are we skeptical enough about our own work? Are we too skeptical as reviewers? Are we more skeptical toward some contributions and less toward others?

I believe that answering these questions can make us more thoughtful about our motivation and enable us to make more informed decisions about our development as researchers, as well as about the development of our research field.

References

1. Lazar, J., Feng, J.H., and Hochheiser, H. Research Methods in Human-Computer Interaction. John Wiley & Sons, 2010.

2. Alexander, J. The four points of the compass. Philosophy 87, 1 (January 2012), 79–107.

3. Myers, B.A. A brief history of human-computer interaction technology. interactions 5, 2 (March 1998), 44–54.

4. Greenberg, S. and Buxton, B. Usability evaluation considered harmful (some of the time). Proc. of CHI ’08. ACM, New York, 2008, 111–120.

5. Zhai, S. Evaluation is the worst form of HCI research except all those other forms that have been tried, 2003.

6. www.businessinnovationfactory.com/iss/innovators/bill-buxton

7. Bush, V. As we may think. The Atlantic Monthly 176, 1 (July 1945), 101–108.

8. Canny, J. The future of human-computer interaction. Queue 4, 6 (July 2006), 24–32.

9. Norman, D.A. The Design of Everyday Things. L. Erlbaum Assoc., Inc., Hillsdale, NJ, 1988.

10. Weiser, M. The computer for the 21st century. Scientific American 265, 3 (September 1991), 94–104.

11. http://en.wikipedia.org/wiki/Skepticism

12. Brooks, F.P. No silver bullet—Essence and accidents of software engineering. IEEE Computer 20, 4 (April 1987), 10–19.

13. Norman, D.A. Human-centered design considered harmful. interactions 12, 4 (July 2005), 14–19.

14. http://videolectures.net/iswc07_welty_hiwr/